Task: Multi-modal medical image fusion

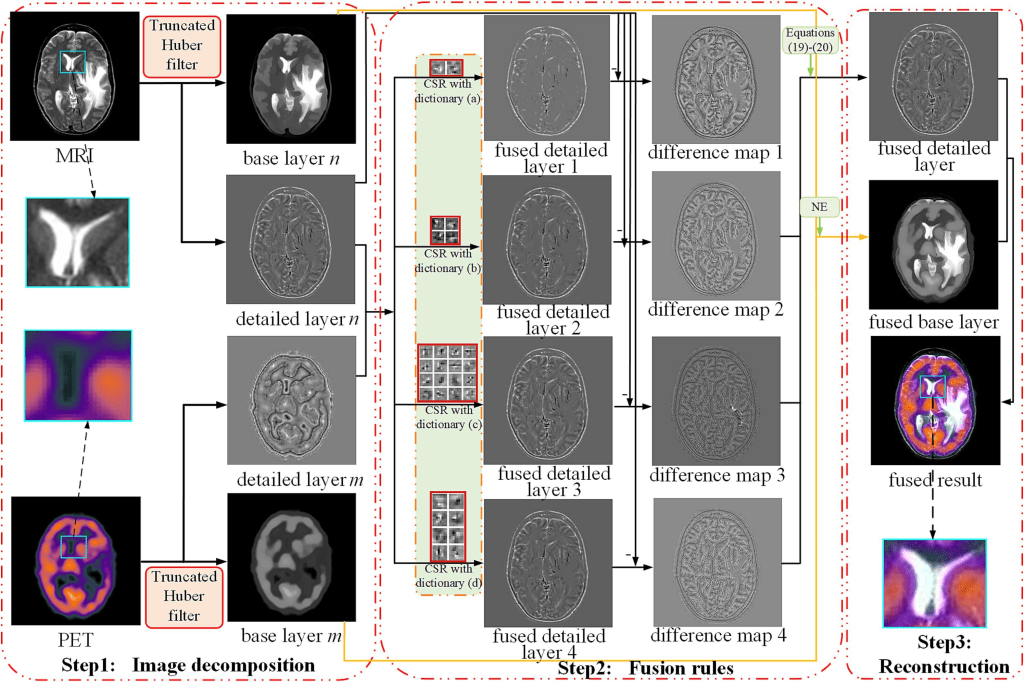

Description: The model implements a novel medical image fusion algorithm based on multi-dictionary convolutional sparse representation (CSR) that retains useful information while remaining robust to noise.

- First, truncated Huber filtering is introduced to decompose the source images into detail and base layers.

- Subsequently, multiple-dictionary decisions and nuclear energy-based rules are proposed to fuse the detail and base layers, respectively. The fused image is reconstructed by synthesizing the fused detail and base components.
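
The decompose, fuse, and reconstruct flow described in the two steps above can be illustrated with a minimal sketch. It assumes a plain mean filter (`box_blur`, a hypothetical helper) as a stand-in smoother; it is not the truncated Huber filter, whose formulation is not given in this description. The point is only that the detail layer is the residual of the base layer, so summing the two layers recovers the image.

```python
import numpy as np

def box_blur(image: np.ndarray, radius: int = 2) -> np.ndarray:
    """Mean filter with edge padding. Stand-in smoother, NOT the truncated Huber filter."""
    size = 2 * radius + 1
    padded = np.pad(image, radius, mode="edge")
    out = np.zeros(image.shape, dtype=np.float64)
    # Accumulate every shifted copy of the image that falls inside the window.
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    return out / (size * size)

def decompose(image: np.ndarray, radius: int = 2):
    """Split an image into a smooth base layer and a residual detail layer."""
    image = image.astype(np.float64)
    base = box_blur(image, radius)
    detail = image - base
    return base, detail

def reconstruct(fused_base: np.ndarray, fused_detail: np.ndarray) -> np.ndarray:
    """Synthesize the fused image from the fused base and detail layers."""
    return fused_base + fused_detail
```

In a full pipeline, `decompose` would be applied to each source image, the two base layers and the two detail layers would be fused by their respective rules, and `reconstruct` would combine the fused layers.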

The proposed medical image fusion rule exploits the advantages of CSR in implementing sparse representation (SR) on global images and improves the accuracy of fusing detailed layers using multiple dictionaries to simultaneously constrain and decide the pixel selection of different detailed layers. For base layer fusion, a nuclear-based energy fusion rule is proposed that simultaneously combines

- the advantages of the local energy operator in effectively preserving energy and contrast information, and

- the performance of the nuclear norm operator in preserving the texture and structure in the base layer.
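
One way to picture a rule that combines these two operators is sketched below. It assumes, purely for illustration, that the activity of a pixel is the product of the local energy and the nuclear norm of its surrounding window, and that the fused base layer takes each pixel from the source with the larger activity. The window radius, the product form, and the choose-max decision are all assumptions of this sketch, not the rule defined by the model developers.

```python
import numpy as np

def activity_map(base: np.ndarray, radius: int = 1) -> np.ndarray:
    """Per-pixel activity: local energy times nuclear norm of the window (assumed form)."""
    size = 2 * radius + 1
    padded = np.pad(base.astype(np.float64), radius, mode="reflect")
    height, width = base.shape
    activity = np.empty((height, width))
    for y in range(height):
        for x in range(width):
            patch = padded[y:y + size, x:x + size]
            local_energy = np.sum(patch ** 2)                # energy and contrast
            nuclear_norm = np.linalg.norm(patch, ord="nuc")  # sum of singular values
            activity[y, x] = local_energy * nuclear_norm
    return activity

def fuse_base(base_a: np.ndarray, base_b: np.ndarray, radius: int = 1) -> np.ndarray:
    """Choose each pixel from the base layer with the higher activity (assumed decision)."""
    mask = activity_map(base_a, radius) >= activity_map(base_b, radius)
    return np.where(mask, base_a, base_b)
```

The per-pixel loop keeps the sketch readable; it is slow for large images and would normally be vectorized.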

This rule can fully extract useful information from the base layers, effectively avoiding residual texture information in the base layer, and suppressing the generation of blurred edges and the pseudo-Gibbs effect. The main contributions of the proposed model and its reference evaluation study are:

- a medical image fusion method based on a multi-dictionary and truncated Huber filter. To the best of the model developers' knowledge, this is the first time the truncated Huber filter has been applied in medical image fusion.

- a detailed layer fusion strategy based on a multi-dictionary CSR to detect the global structure and detailed information in medical images.

- a base layer fusion rule based on nuclear energy to preserve the energy and structural information.

- qualitative and quantitative evaluations via extensive experimental results confirming that the proposed method outperformed the state-of-the-art methods in both noise-free and noise-perturbed fusions.

The model implements a medical image fusion scheme based on multiple dictionaries and truncated Huber filtering (MDHU). The proposed MDHU method is summarized in Algorithm 1, and the general framework is shown below.

The truncated Huber filter is introduced to decompose medical images into base and detailed layers to fully identify and transfer useful information. Fusion rules are designed to acquire the corresponding fused layers according to the features of the two layers. The detailed layer fusion rule exploits the advantages of CSR in achieving SR globally while combining multiple-dictionary constraints to improve the retention of detailed information. For the base layer fusion rule, a nuclear energy (NE) fusion rule is developed to fully extract the energy and contrast information from the base layer. The final fusion result is obtained by reconstructing the fused detail and base layers.
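
For orientation, the standard single-dictionary CSR model is usually written as the following optimization problem. This is the generic formulation, not the multi-dictionary variant used by MDHU, whose exact objective is not given in this description. The symbols are assumptions of this note: $s$ is the image (here, a detail layer), $d_m$ are the $M$ dictionary filters, $x_m$ are the sparse coefficient maps, $*$ is convolution, and $\lambda$ is the sparsity weight.

$$
\min_{\{x_m\}} \; \frac{1}{2} \Big\| \sum_{m=1}^{M} d_m * x_m - s \Big\|_2^2 + \lambda \sum_{m=1}^{M} \| x_m \|_1
$$

Because the coefficient maps $x_m$ span the whole image rather than independent patches, the representation is global, which is the property the detailed layer fusion rule relies on.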

Validation: Tests were performed on 90 image pairs from publicly available datasets, comprising 29, 34, 9, 8, and 10 pairs of MRI-PET, MRI-SPECT, CT-MR_T2, MR_T2-MR_Gad, and MR_T1-MR_T2 images, respectively. All test images were 256 x 256 pixels.

The proposed approach was compared with 13 state-of-the-art methods:

- energy-attribute-based fusion (MSMG),

- multilevel edge retention scheme (MLMG),

- Laplace-filtering-based method (LFD) [9],

- coupled-dictionary learning (CFL),

- Laplacian redecomposition-based scheme (LRD),

- a method based on a pretrained neural network (LMF),

- an approach based on GANs (DDcGAN),

- a scheme based on a compression and decomposition network (SDNet),

- an approach based on fully CNN (IFCNN),

- a unified end-to-end scheme (U2Fusion),

- a network based on enhanced information retention (EMFusion),

- multiscale adaptive transformer (MATR),

- and correlation-driven fusion (CDD).

For fairness and accuracy of the experiments, the parameter settings of all comparison methods were consistent with those in the corresponding references, and the codes were provided by the corresponding authors. The quality of different fusion results is difficult to judge with human eyes; therefore, both subjective and objective evaluations were conducted. The accurate assessment of the advantages and disadvantages of fused results is difficult using a single metric considering the different image attributes. Six metrics covering four different evaluation perspectives were used.

- QMI quantitatively evaluates the reliance between the source and fused images;

- QNCIE is designed based on the nonlinear correlation coefficient between discrete variables;

- QG measures the amount of gradient information transferred to the fused image;

- QM measures the edge retention of the fused image from the source image;

- QS evaluates the structure retention of the fused result; and

- QCB measures the quality of the fused result through simulating human perceptual reflection.
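
As an illustration of the first metric in this list, the sketch below computes a normalized mutual-information score from joint histograms. The normalization shown (each mutual-information term divided by the sum of the two marginal entropies, then scaled by 2) is one common form of QMI and is assumed here; the bin count is an arbitrary choice, and the evaluation study may have used a different implementation.

```python
import numpy as np

def entropy(p: np.ndarray) -> float:
    """Shannon entropy in bits of a probability vector, ignoring empty bins."""
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))

def mutual_information(x: np.ndarray, y: np.ndarray, bins: int = 32):
    """Return (MI, H(x), H(y)) estimated from a joint intensity histogram."""
    joint, _, _ = np.histogram2d(x.ravel(), y.ravel(), bins=bins)
    pxy = joint / joint.sum()
    h_x = entropy(pxy.sum(axis=1))
    h_y = entropy(pxy.sum(axis=0))
    h_xy = entropy(pxy.ravel())
    return h_x + h_y - h_xy, h_x, h_y

def q_mi(src_a: np.ndarray, src_b: np.ndarray, fused: np.ndarray, bins: int = 32) -> float:
    """Normalized mutual information between each source and the fused image (assumed form)."""
    mi_af, h_a, h_f = mutual_information(src_a, fused, bins)
    mi_bf, h_b, _ = mutual_information(src_b, fused, bins)
    return 2.0 * (mi_af / (h_a + h_f) + mi_bf / (h_b + h_f))
```

With this normalization the score is largest when the fused image determines both sources, and it is undefined for constant images because their entropies are zero.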

In addition, the gray-level co-occurrence matrix (GLCM) and signal-to-noise ratio (SNR) metrics were utilized to measure the degree of image texture retention and noise robustness, respectively. Each metric positively correlates with the quality of the fused images.
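
The two auxiliary measures can be sketched as follows. The SNR shown is the usual ratio of signal power to error power in decibels against a reference image; which image serves as the reference in the evaluation study is not stated here, so `reference` is a hypothetical argument. The GLCM is built for horizontally adjacent pixels only, with intensities assumed to lie in [0, 1] and an arbitrary number of gray levels, and contrast is shown as one example of a texture statistic derived from it.

```python
import numpy as np

def snr_db(reference: np.ndarray, test: np.ndarray) -> float:
    """Signal-to-noise ratio in dB of `test` against `reference` (must differ somewhere)."""
    reference = reference.astype(np.float64)
    noise = reference - test.astype(np.float64)
    return float(10.0 * np.log10(np.sum(reference ** 2) / np.sum(noise ** 2)))

def glcm(image: np.ndarray, levels: int = 8) -> np.ndarray:
    """Normalized co-occurrence matrix of horizontal neighbours; values assumed in [0, 1]."""
    quantized = np.minimum((image * levels).astype(int), levels - 1)
    counts = np.zeros((levels, levels))
    # Count each (left pixel level, right pixel level) pair.
    np.add.at(counts, (quantized[:, :-1].ravel(), quantized[:, 1:].ravel()), 1)
    return counts / counts.sum()

def glcm_contrast(p: np.ndarray) -> float:
    """Contrast statistic: expected squared level difference between neighbours."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))
```

A flat region gives zero contrast, while strongly textured regions give larger values, which is why GLCM statistics are used as texture indicators.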

Results: The proposed model effectively fuses the global structure and texture information of the source images while exhibiting strong robustness against noise. Experiments involving extensive noise-free and noisy anatomical and functional medical image fusion on a public dataset covering five fusion categories demonstrate that the proposed method outperforms other state-of-the-art methods in subjective and objective evaluations.

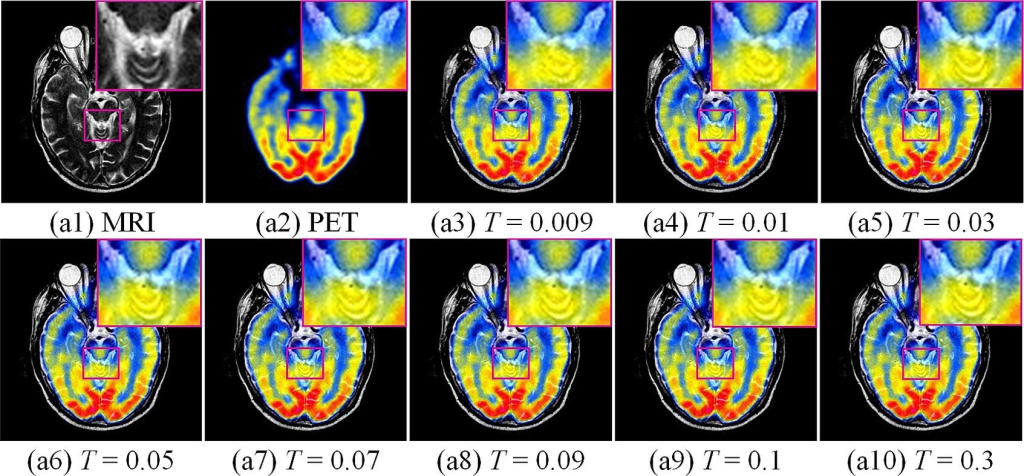

Considering MRI-PET images as examples, a control-variable approach was adopted to analyze two important parameters in the model: threshold T and threshold v. T and v have a significant impact on the quality of the fused detailed and base layers, respectively. Other parameters that have less influence on the proposed model were set directly. The figure below shows the results of the subjective and objective evaluations of a set of MRI-PET images, with the magnified images located in the upper-right corner. When T was less than 0.03, significant detailed information was lost. By contrast, when T was greater than 0.03, there was only a slight difference in the detail retention of the fused images.
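
A control-variable analysis of this kind varies one parameter while holding the others fixed. The sketch below shows the pattern with a hypothetical helper, `sweep_parameter`, and a placeholder scoring function that simply echoes its inputs. The candidate values of T and the fixed value of v are illustrative assumptions, not settings reported by the study; in practice the placeholder would be replaced by a function that runs the fusion and returns the evaluation metrics.

```python
from typing import Any, Callable, Dict, List, Sequence, Tuple

def sweep_parameter(name: str,
                    values: Sequence[float],
                    fixed: Dict[str, float],
                    evaluate: Callable[..., Any]) -> List[Tuple[float, Any]]:
    """Evaluate `evaluate` once per candidate value of `name`, keeping `fixed` unchanged."""
    results = []
    for value in values:
        params = dict(fixed)   # copy so the fixed settings are never mutated
        params[name] = value
        results.append((value, evaluate(**params)))
    return results

def placeholder_score(T: float, v: float) -> Tuple[float, float]:
    """Placeholder: echoes its inputs instead of running fusion and computing metrics."""
    return (T, v)

# Vary T over hypothetical candidates while v stays at a hypothetical fixed value.
t_results = sweep_parameter("T", [0.01, 0.03, 0.05], {"v": 0.5}, placeholder_score)
```

The same helper can then be called with `name="v"` and T held fixed to study the second threshold.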

Claim: A multi-modal medical image fusion model is introduced based on multi-dictionary and truncated Huber filtering to overcome the potential loss of features in existing methods. Furthermore, a nuclear energy-based rule was proposed to fuse the base layers, effectively detecting the local energy and large structural information. Moreover, MDHU was robust in noise-corrupted fusion problems. Extensive experiments demonstrated that MDHU outperformed certain advanced methods. The ample experimental dataset and proper parameter selection techniques resulted in a model with reliable and clinically reproducible performance, with important practical implications for the identification of diseases such as Alzheimer’s disease and cavernous hemangiomas.

Although the proposed MDHU has excellent fusion performance, there is room for further improvement. Noise interference is inevitable while capturing medical images. However, the proposed MDHU is only robust to noise and cannot fully remove it. Therefore, future improvements will focus on enhancing the model’s clinical practicability. In the future, the model’s ability to support tumor segmentation will also be assessed, to further enhance its auxiliary role in the diagnosis of specific diseases.

Data availability statement: Data will be made available on request.